SaaS Application to AWS S3 Made Easy Via AppFlow

Introduction

There is an overwhelming demand for businesses to make data-driven decisions, understand their customer base through advanced analytics, and forecast future outcomes with machine learning (ML). Companies striving toward these goals often run into one common issue: data silos. Many are working to break down silos spread across several SaaS applications, on-premises sources, and cloud resources. It typically takes customers many months to build custom connectors that meet the top data integration requirements, including batch and event-driven data processing, data synchronization across several apps, and secure data access. Once the connectors are built, managing the integration code tailored to each application and adapting to increased scaling needs can be difficult and expensive.

Amazon AppFlow directly addresses these issues. AppFlow is a low-code to no-code, fully managed data integration service that lets you exchange data quickly, securely, and at scale, either in response to events or on a schedule. It integrates with a variety of SaaS applications including Salesforce, Marketo, Datadog, Dynatrace, and many more. It enables customers to implement a range of common integration tasks without dedicating months to reading API documentation and perfecting the underlying code.

Some common use cases for AppFlow include:

Ingesting Salesforce data into an Amazon S3 data lake or Amazon Redshift table to be presented via Amazon QuickSight

Loading and analyzing logs from on-premises servers to identify and address recurring performance issues

Enriching and cleaning data sets via SageMaker by loading data from several SaaS applications

Syncing data between SAP applications and an S3 data lake using AppFlow’s bidirectional capabilities via PrivateLink

Whether you need to combine data from several SaaS apps quickly into AWS, move your data into AWS, or test out AWS services with your own data set, Amazon AppFlow simplifies the process every step of the way.

Let’s dive a little deeper into AppFlow’s capabilities and considerations.

One of the biggest challenges with adding a new data integration tool to your data pipeline is the amount of time it takes to install, configure, and test the tool. AppFlow has a user-friendly point-and-click interface that requires little to no coding experience. The simple UI lets you set up a secure data pipeline in a matter of minutes.

When it comes to data processing, AppFlow can process up to 100 GB of data per flow. The service also supports bidirectional data transfer between SAP systems and AWS using the pre-built connectors. AppFlow uses a highly available architecture with redundant resources intended to prevent any single point of failure.

How much does it cost?

There is no upfront cost to use AppFlow. Customers pay only for the number of flows they run and the volume of data processed. When using AppFlow, customers are not charged the usual AWS data transfer fees. Within the US East (N. Virginia) Region, the cost is $0.02 per GB of data processed for flows where the destination is hosted on AWS or is integrated with AWS PrivateLink. For flows whose destinations are outside AWS and do not integrate with PrivateLink, the charge is $0.10 per GB of data. Any additional costs pertain to storage or encryption key usage. Note that third-party applications that require a license may carry an additional charge.
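To make the arithmetic concrete, here is a minimal sketch of a cost estimator built on the two per-GB rates quoted above. The per-flow-run price is not stated in this article, so it is a required argument that you would fill in from the current AWS pricing page; the function name and the sample workload are hypothetical.

```python
from decimal import Decimal

# Per-GB rates quoted in this article for the US East (N. Virginia) Region.
RATE_AWS_OR_PRIVATELINK = Decimal("0.02")  # destination on AWS or PrivateLink-integrated
RATE_OTHER_DESTINATION = Decimal("0.10")   # destination outside AWS, no PrivateLink


def estimate_appflow_cost(flow_runs, gb_processed, price_per_flow_run,
                          destination_on_aws_or_privatelink=True):
    """Return an estimated AppFlow charge in USD as a Decimal.

    price_per_flow_run is NOT taken from the article; look it up on the
    AWS pricing page. Storage, key usage, and third-party license costs
    are not included.
    """
    if flow_runs < 0 or gb_processed < 0:
        raise ValueError("flow_runs and gb_processed must be non-negative")
    rate = (RATE_AWS_OR_PRIVATELINK if destination_on_aws_or_privatelink
            else RATE_OTHER_DESTINATION)
    run_charge = Decimal(str(price_per_flow_run)) * flow_runs
    data_charge = rate * Decimal(str(gb_processed))
    return run_charge + data_charge


if __name__ == "__main__":
    # Hypothetical workload: 30 runs moving 50 GB in total into S3,
    # with an assumed (not article-sourced) price of $0.001 per run.
    print(estimate_appflow_cost(30, 50, "0.001"))
```

Using `Decimal` rather than floats keeps small per-run amounts from picking up rounding noise when they are summed.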

Is it secure?

Security is a key consideration when setting up a new pipeline. By default, all sensitive connection information, including credentials and authentication tokens, is encrypted with AWS Key Management Service (KMS) and stored in AWS Secrets Manager. Only users with the proper AWS Identity and Access Management (IAM) permissions can access those secrets. AppFlow automatically secures all data in transit using TLS 1.2. All metadata associated with a connection is deleted when the connection itself is deleted. When using S3 as a destination, Amazon S3 server-side encryption with KMS keys (SSE-KMS) lets you encrypt all data at rest.
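As an illustration of the S3 side of this, the sketch below builds the default-encryption configuration accepted by the S3 `PutBucketEncryption` API, so that objects landing in the destination bucket are encrypted with SSE-KMS. The helper name, bucket name, and key ARN are hypothetical placeholders, and the commented-out boto3 call assumes credentials with permission to change bucket settings.

```python
def sse_kms_encryption_config(kms_key_id):
    """Build a ServerSideEncryptionConfiguration that defaults a bucket to SSE-KMS."""
    if not kms_key_id:
        raise ValueError("kms_key_id is required for SSE-KMS")
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_id,
                }
            }
        ]
    }


if __name__ == "__main__":
    # Hypothetical key ARN, for illustration only.
    config = sse_kms_encryption_config(
        "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID"
    )
    print(config)
    # To apply it (requires boto3 and AWS credentials):
    #   import boto3
    #   boto3.client("s3").put_bucket_encryption(
    #       Bucket="my-appflow-landing-bucket",  # hypothetical bucket
    #       ServerSideEncryptionConfiguration=config,
    #   )
```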

Several SaaS applications support PrivateLink connections, which keep traffic on AWS’s backbone network. With the PrivateLink option selected, the transferred data is never exposed to the public internet, adding another layer of security.

Now that we’ve walked through the features and benefits of AppFlow, let’s look at how easy it is to configure a connector.

How does it work?

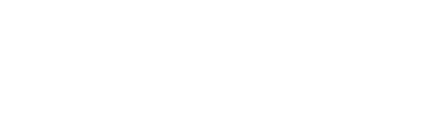

1. Set up a new flow. Enter a name for the flow and select the source connector.

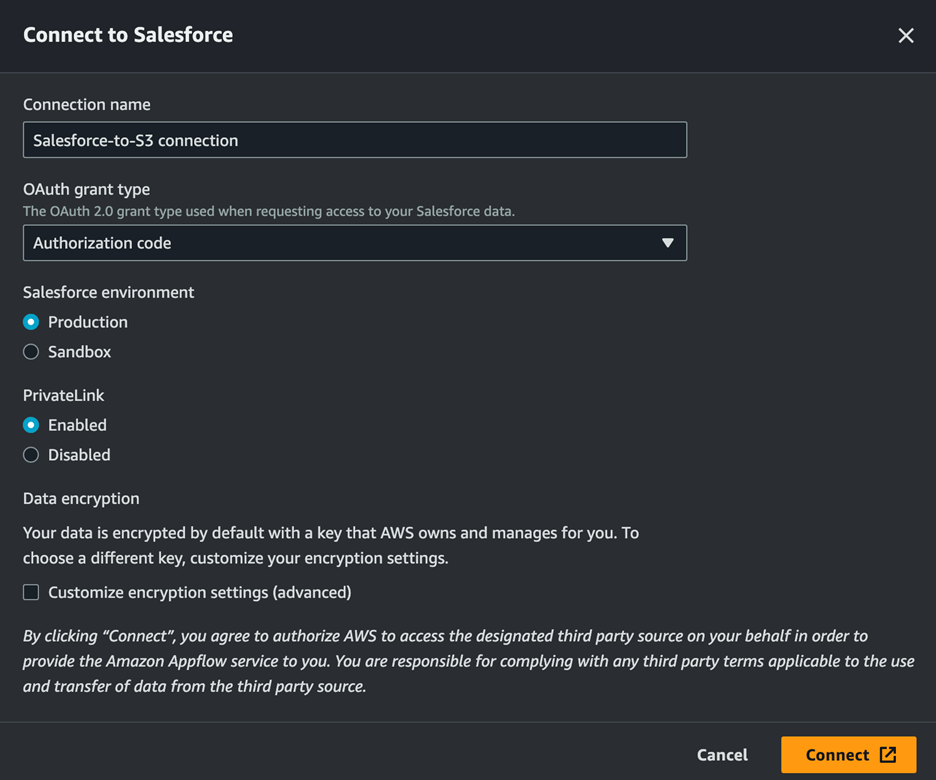

2. Create a connection for the source.

Name the connection, select the authorization type, enable PrivateLink if the SaaS app integrates with the service, and press the orange connect button. This opens a pop-up that lets you log in to the SaaS app to begin provisioning the connection. When the source is Salesforce, the connection will also show up in the Salesforce console under the “Private Connect” settings. Select the connection and hit the provision button. Salesforce may require a license to use Private Connect.

3. Create a connection for your destination.

The data will be loaded into S3 for this example. Select your bucket name and create a prefix. If you want to store the metadata in the AWS Glue Data Catalog, those settings can be configured at this step as well. Select the desired format for the data and whether a timestamp on the file name is preferred. Hit the orange next button to finish setting up the connection.

4. Determine the flow trigger. The flow can run on demand, on a set schedule, or in response to an event.

5. Map data fields as needed. Add any filters, calculations, or validations required.

6. Review all flow details, hit create flow, and then select the start workflow option. You will see a green pop-up with the details of the flow.
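If you later want to script the same setup instead of clicking through the console, steps 1 through 5 map roughly onto the arguments of the AppFlow `CreateFlow` API. The sketch below only assembles the request for a Salesforce-to-S3 flow; the flow name, connection name, bucket, prefix, and field list are hypothetical, and it assumes the Salesforce connection from step 2 already exists. Depending on your data, the mapping tasks may need additional properties, so treat this as a starting point rather than a definitive template.

```python
import json

VALID_TRIGGERS = ("OnDemand", "Scheduled", "Event")


def build_flow_request(flow_name, connection_name, salesforce_object,
                       bucket, prefix, fields,
                       trigger_type="OnDemand", schedule_expression=None,
                       file_type="CSV"):
    """Assemble keyword arguments for the AppFlow CreateFlow API."""
    if trigger_type not in VALID_TRIGGERS:
        raise ValueError(f"trigger_type must be one of {VALID_TRIGGERS}")

    # Step 4: on demand, on a schedule, or event based.
    trigger = {"triggerType": trigger_type}
    if trigger_type == "Scheduled":
        if not schedule_expression:
            raise ValueError("a scheduled flow needs a schedule_expression")
        trigger["triggerProperties"] = {
            "Scheduled": {"scheduleExpression": schedule_expression}
        }

    # Step 5: select the fields to pull, then map each one to a
    # destination field of the same name.
    tasks = [{
        "taskType": "Filter",
        "sourceFields": list(fields),
        "connectorOperator": {"Salesforce": "PROJECTION"},
    }]
    for field in fields:
        tasks.append({
            "taskType": "Map",
            "sourceFields": [field],
            "destinationField": field,
            "connectorOperator": {"Salesforce": "NO_OP"},
        })

    return {
        "flowName": flow_name,                           # step 1
        "triggerConfig": trigger,
        "sourceFlowConfig": {                            # step 2
            "connectorType": "Salesforce",
            "connectorProfileName": connection_name,
            "sourceConnectorProperties": {
                "Salesforce": {"object": salesforce_object}
            },
        },
        "destinationFlowConfigList": [{                  # step 3
            "connectorType": "S3",
            "destinationConnectorProperties": {
                "S3": {
                    "bucketName": bucket,
                    "bucketPrefix": prefix,
                    "s3OutputFormatConfig": {"fileType": file_type},
                }
            },
        }],
        "tasks": tasks,
    }


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    request = build_flow_request(
        "salesforce-accounts-to-s3", "my-salesforce-connection",
        "Account", "my-appflow-landing-bucket", "salesforce",
        ["Id", "Name"],
    )
    print(json.dumps(request, indent=2))
    # To create and start the flow (requires boto3 and AWS credentials):
    #   import boto3
    #   appflow = boto3.client("appflow")
    #   appflow.create_flow(**request)
    #   appflow.start_flow(flowName=request["flowName"])
```

Keeping the request builder separate from the API call makes it easy to review or version-control the flow definition before anything is created in your account.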

From this point on, you can verify that your data is loaded in the destination by navigating to the destination directly.
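For an S3 destination, that check can also be scripted. The following is a minimal sketch that lists the object keys under the flow’s prefix using the S3 `list_objects_v2` call; the bucket and prefix in the usage comment are hypothetical, and the function accepts any client object so it can be exercised without AWS access.

```python
def list_flow_output(s3_client, bucket, prefix):
    """Return the keys of all objects under prefix, following pagination."""
    keys = []
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        # "Contents" is absent when no objects match the prefix.
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
        if not page.get("IsTruncated"):
            return keys
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


# Example usage (requires boto3 and AWS credentials; names are hypothetical):
#   import boto3
#   keys = list_flow_output(boto3.client("s3"),
#                           "my-appflow-landing-bucket", "salesforce/")
#   print(f"{len(keys)} object(s) written by the flow")
```

An empty list right after the first run usually just means the flow has not finished yet, so it is worth checking the run status in the AppFlow console before assuming something went wrong.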

Voila! You have now created a workflow to ingest, transform, and load data from your SaaS applications into the AWS environment. It’s really that simple.

AppFlow enables you to rapidly set up a cost-effective, reliable, secure, and scalable data pipeline in minutes without writing any code. It allows organizations to provide their teams with the data they need to gain valuable insight and make data-driven decisions. If you’d like some additional guidance or expertise, don’t hesitate to reach out to our team of AWS professionals at PMsquare.

Next Steps

If you’re interested in receiving some guidance or additional help with Amazon AppFlow, send a message our way! We hope you found this article informative. Be sure to subscribe to our newsletter for PMsquare original articles, updates, and insights delivered directly to your inbox.