Andreea Stanovici, June 17, 2024


Generative Artificial Intelligence (GenAI) empowers businesses with unparalleled potential for innovation, efficiency, and competitive advantage, provided it is implemented effectively. Despite this potential, organizations are often hesitant to fully embrace interactions with GenAI applications due to apprehensions surrounding the management of user inputs and foundation model outputs. With Guardrails for Amazon Bedrock, businesses can establish safeguards in compliance with their responsible AI policies by denying restricted topics and filtering both inputs and model outputs based on predefined words or content. Let’s walk through the capabilities of Guardrails to see it in action.


Content Filtering

Guardrails enables organizations to filter out hateful content, insults, sexual content, violence, misconduct, and prompt attacks. Each category has its own filter strength, ranging from “None” to “High”, which can be adjusted according to company policy. The prompt attack filter is especially important for customer-facing chatbots, where any user can attempt to manipulate the model.

When a guardrail is configured to filter out these categories, the filter will block the message from reaching the foundation model if one of the categories is detected in the user input. Although foundation models are trained to avoid any hateful speech, these category filters can also be applied to the model output as an extra layer of protection, preventing inappropriate responses from reaching the end user.
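As a rough illustration of the settings described above, the sketch below builds a content filter policy in Python. It assumes the guardrail is created through the boto3 `bedrock` client's `create_guardrail` call; the field names reflect that request shape as we understand it and should be checked against the current AWS documentation. The helper names and the blocked-message strings are hypothetical.

```python
# Sketch only: field names follow our understanding of the boto3
# `create_guardrail` request shape; verify against the current AWS docs.

CATEGORIES = ["HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT"]
STRENGTHS = {"NONE", "LOW", "MEDIUM", "HIGH"}


def build_content_policy(strength="HIGH", prompt_attack_strength="HIGH"):
    """Return a content policy that applies one strength to every category."""
    for value in (strength, prompt_attack_strength):
        if value not in STRENGTHS:
            raise ValueError(f"unknown filter strength: {value!r}")
    # The same strength is used for user input and for model output.
    filters = [
        {"type": category, "inputStrength": strength, "outputStrength": strength}
        for category in CATEGORIES
    ]
    # Prompt attacks arrive in user input, so the output side is switched off.
    filters.append(
        {
            "type": "PROMPT_ATTACK",
            "inputStrength": prompt_attack_strength,
            "outputStrength": "NONE",
        }
    )
    return {"filtersConfig": filters}


def create_guardrail(name, content_policy):
    """Create the guardrail in AWS. Needs boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above runs without AWS access

    client = boto3.client("bedrock")
    return client.create_guardrail(
        name=name,
        contentPolicyConfig=content_policy,
        # Hypothetical messages shown to the user when the guardrail intervenes.
        blockedInputMessaging="Sorry, I can't help with that request.",
        blockedOutputsMessaging="Sorry, I can't share that response.",
    )


# Example: a moderate policy for an internal tool.
policy = build_content_policy(strength="MEDIUM")
```

Keeping the policy builder separate from the AWS call makes it possible to review or unit test the configuration without touching an account.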

PII Detection

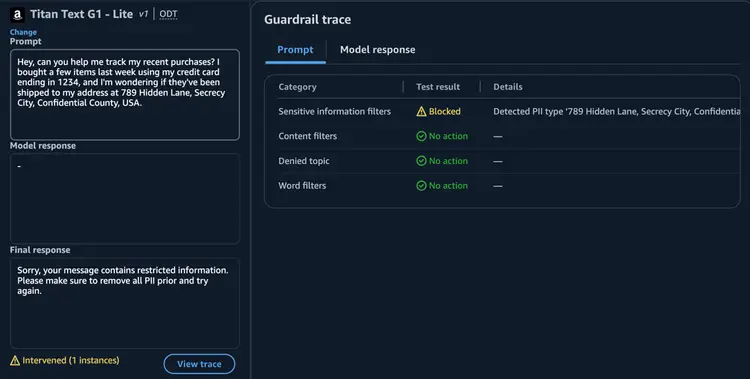

One common concern with GenAI-powered chatbots is that users may unintentionally include Personally Identifiable Information (PII) in their messages. This creates both significant privacy risks and compliance issues. Fortunately, Guardrails can detect a variety of PII including social security numbers, names, emails, addresses, AWS access keys, and more. Developers have the option of either “masking” the sensitive data in the model response or blocking the user’s input completely before it is sent to the foundation model.

In the example below, the user is asking the chatbot for an update to their order while unintentionally revealing their address. The address attribute has been configured to block the message in order to protect the user’s privacy and avoid any PII being processed by the foundation model. It is important to note that the foundation models hosted on Amazon Bedrock do not use customer data for further training, so blocking messages with a PII filter adds an extra layer of protection for the user. When an input or output violates one of the defined policies, developers can trace the request to see exactly why Guardrails intervened.

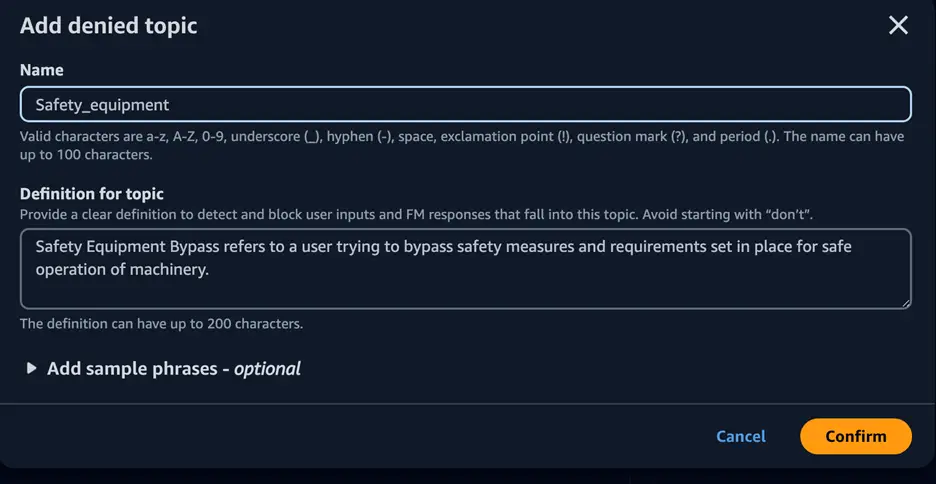
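The block-or-mask choice described in this section can be expressed as a small configuration. The sketch below is a minimal example, assuming the result is passed as the `sensitiveInformationPolicyConfig` argument of the boto3 `create_guardrail` call; the entity type names and the `BLOCK` / `ANONYMIZE` actions reflect that API as we understand it and should be verified against the AWS documentation. The helper name is hypothetical.

```python
# Sketch only: entity types and actions follow our understanding of the
# Guardrails PII settings; verify them against the current AWS docs.

PII_ACTIONS = {"BLOCK", "ANONYMIZE"}  # ANONYMIZE corresponds to "masking"


def build_pii_policy(actions):
    """Turn a {entity_type: action} mapping into a PII policy dict."""
    entities = []
    for entity_type, action in actions.items():
        if action not in PII_ACTIONS:
            raise ValueError(f"unknown PII action: {action!r}")
        entities.append({"type": entity_type, "action": action})
    return {"piiEntitiesConfig": entities}


# Block addresses and credentials outright, but only mask email addresses.
pii_policy = build_pii_policy(
    {
        "ADDRESS": "BLOCK",
        "US_SOCIAL_SECURITY_NUMBER": "BLOCK",
        "AWS_ACCESS_KEY": "BLOCK",
        "EMAIL": "ANONYMIZE",
    }
)
```

Blocking suits data that should never reach the model, such as the address in the example above, while masking keeps the conversation going with the sensitive value replaced.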

Denied Topics

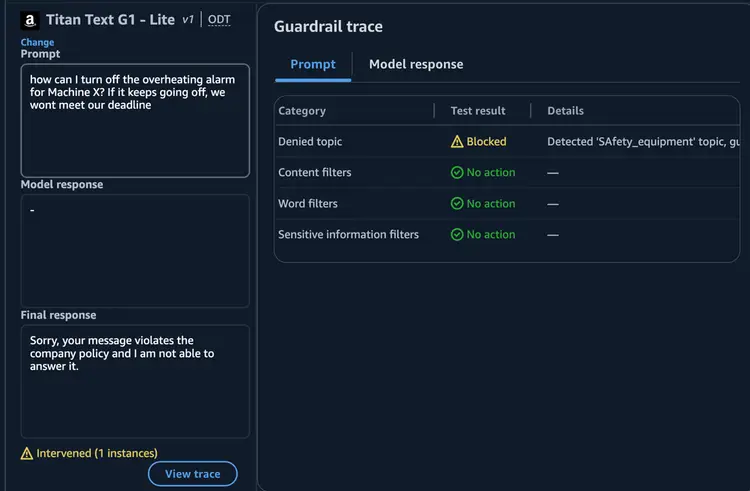

Guardrails allows specific topics to be explicitly denied if they violate a company policy. For example, let’s say a manufacturing company builds a chatbot with access to documentation to help employees properly operate its machinery. The organization would want to explicitly deny a user’s ability to ask the chatbot how to bypass critical safety measures such as an alarm for overheating equipment. An example denied topic covering this use case is outlined below.

In this example, the user’s request to bypass a safety measure is categorized as a violation of the denied Safety Equipment bypass topic. Because of the implemented restriction, the user receives a message informing them that the model will not answer the question due to company policy. This message is fully customizable.
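For readers who configure guardrails in code rather than in the console, a denied topic like the one above might look roughly as follows. This is a sketch, assuming the result is passed as the `topicPolicyConfig` argument of the boto3 `create_guardrail` call, with field names as we understand that API. The topic definition, example phrases, blocked message, and helper name are all hypothetical wording of our own, not the configuration used in the screenshots.

```python
# Sketch only: field names follow our understanding of the boto3
# `create_guardrail` request shape; verify against the current AWS docs.


def build_denied_topic(name, definition, examples=()):
    """Describe one topic the chatbot must refuse to discuss."""
    return {
        "name": name,
        "definition": definition,
        "examples": list(examples),  # sample user phrasings of the topic
        "type": "DENY",
    }


topic_policy = {
    "topicsConfig": [
        build_denied_topic(
            name="Safety Equipment Bypass",
            definition=(
                "Requests for instructions on disabling, bypassing, or "
                "ignoring safety measures on machinery."
            ),
            examples=[
                "How do I turn off the overheating alarm?",
                "Can I run the press with the guard removed?",
            ],
        )
    ]
}

# Hypothetical custom message returned when the topic is detected.
BLOCKED_MESSAGE = "This question cannot be answered due to company safety policy."
```

A short, plain-language definition plus a few representative phrasings is usually enough to describe the topic; the custom message would be supplied alongside the policy when the guardrail is created.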

Conclusion

Guardrails for Amazon Bedrock provides essential safeguards for businesses leveraging large language models hosted on Bedrock. With configurable filters and denied topics, Guardrails enables compliance with company policies, protecting user privacy and mitigating regulatory risks. This proactive approach empowers businesses to harness the innovation potential of GenAI while maintaining ethical standards and building trust with users.

Next Steps

We hope you found this article to be informative and helpful. If you have any questions or would like PMsquare to provide guidance and support for your AWS implementation, contact us today. Be sure to subscribe to our newsletter for more PMsquare articles, updates, and insights delivered directly to your inbox.
