Mike DeGeus, November 8, 2021

Get the Best Solution for

Your Business Today!

If you’re tuned in to trends and thought leadership in the world of data and analytics, you may have caught wind of the phrase “data mesh”. This article seeks to explain the concepts of data mesh in a succinct manner to those who have been immersed for some time in the realm of data and business intelligence/business analytics but haven’t yet learned enough to decide whether data mesh is just a trendy catch-phrase or a substantive new approach that can significantly improve the business value derived from data. (We firmly believe it’s the latter.)

Table of Contents

If already are well versed in data mesh foundational concepts, keep an eye out for a subsequent post later this year that will look at how to begin to apply some data mesh principles in your organization. Or, check out the presentation I recently did with my colleague Ryan which covers the same ground as this article as well as some application ideas.

What is Data Mesh?

Well, here’s a definition:

An intentionally designed distributed data architecture, under centralized governance and standardization for interoperability, enabled by a shared and harmonized self-serve data infrastructure

Crystal clear? Probably not, so let’s take a closer look. The best way to understand Data Mesh is to under the core principles. But before we do that, it’s helpful to understand where the idea of Data Mesh came from to start with.

The inventor of Data Mesh is Zhamak Dehghani. She coined the phrase when she published her first article on this topic in May of 2019. In that article, she reviewed the “two planes” of data:

- Operational plane – this is the data that exists as the back end for operational software and systems

- Analytical plane – data that is used for analytical purposes (enterprise reporting, self-service dashboarding, data science, etc.)

Of course, the venerable “ETL” process we all know so well connects the two planes.

She observed that the operational world has now long conformed as a best practice to “domain-driven design” concepts. Essentially, that means that the software itself is designed to align as closely as possible to the business domain it supports. A well-known example of this is the microservices architecture.

The analytical plane, on the other hand, for some reason manifests in organizations consistently as a monolithic architecture. In other words, data flows from various points throughout the organization into a central repository (through ETL processes). Zhamak’s big idea was that the same domain-driven design concepts that have yielded results in the operational plane should also be applied to the analytical plane.

The 4 Principles of Data Mesh

Equipped with a bit of background, we’re ready to look at the four principles of Data Mesh

Domain-oriented decentralized data ownership & architecture

What is a domain, anyway? It’s essentially it’s an area, a function, or a “slice” of the business. The challenge is defining exactly what constitutes a domain within a particular organization. One way to approach that challenge is to start with source systems. (Interesting note: Zhamak calls source systems “systems of reality” since the datasets from those systems have not been transformed or “cleaned” in any way—they directly reflect what happened in the business.) From a data standpoint, a domain could be defined by piecing together which source systems need to be combined in order to form a cohesive domain dataset. These combine to form a “source domain dataset”. These eventually will be transformed into “consumer-aligned datasets” to fit the needs of users.

Once a domain is defined, the data mesh principle of data ownership within that domain can be applied. This means that two key roles exist within the domain:

The Data Product Owner is responsible for the vision and roadmap for data “products” (more on data as a product in a minute). This role focuses on customer satisfaction—in other words, are those who are consuming the data happy with what they are getting and how they are getting it? In order to do this, they must have a deep understanding of who the users are, how they use the data, and how they will access it. This likely means understanding multiple user personas; for instance, a data analyst and data scientist likely have very different needs.

The Data Engineer role allows for the development of a data product within the domain. This role takes direction from the data product owner and is able to execute upon it through their competency in both data engineering tools (ETL solutions like Informatica, Datastage, AWS Glue, etc.) as well as software engineering best practices. The idea here is that the data engineer has knowledge of domain-driven design from the operational world, and can therefore apply those principles to producing analytical data products.

Data as a Product

Again, it helps to start by defining our terms. What is a product? You might think of it as “something you can buy”, but conceptually it’s an intersection between users, business, and technology. More specifically, a product is the result of a process between users and a business, with technology acting as a bridge between the two.

In order to treat data as a product, we need to employ “product thinking”. Product thinking acknowledges the existence of a “problem space” (what users need) as well as a “solution space” (what business can give). It seeks to reduce the gap between users and the business through a singular focus on solving problems.

This sounds pretty simple and perhaps even common sense. However, the reality is that in the real world something different often happens. For instance, businesses frequently start with solutions. They’ve built something, and so when they talk to users/customers, they simply try to convince them that what they have already built will solve the users’ problems. Similarly, businesses will think and communicate in product “features”—cool things the product does which may or may not actually tie back to any problem that the user has.

Okay, so getting back to data. As mentioned above when discussing the Data Product Owner role, customers being served with an analytical data product are usually various types of analysts and data scientists. Therefore, the domain owners must ensure that they understand the problems that these customers face and develop solutions that delight their customers! These solutions are “data products”.

Data Mesh goes one step further and prescribes specific capabilities that should be part of any data product:

It’s beyond the scope of this post to explain each of these—instead, visit Zhamak’s original post for details. In a future article here, we will look at some ideas about how these capabilities map to capabilities of software you might already have for delivering data products.

Self-serve data infrastructure as a platform

This one is fairly simple to understand. The idea here is that there should be an underlying infrastructure for data products that can be easily provisioned by the various domains in an organization so that they can quickly get to the work of creating the data products. The domains should not have to worry about the underlying complexity of servers, operating systems, networking, etc. Furthermore, the infrastructure should include things like encryption, data product versioning, data schema, and automation.

The cloud is the recommended method for making this self-service infrastructure available so that the domains can quickly be dropped into the data product “developer experience” where they can get going on the development of the data product. Since the platform is made available from a central body, there is a certain amount of data mesh “supervision” (versioning, automation, etc.) that is baked into the infrastructure.

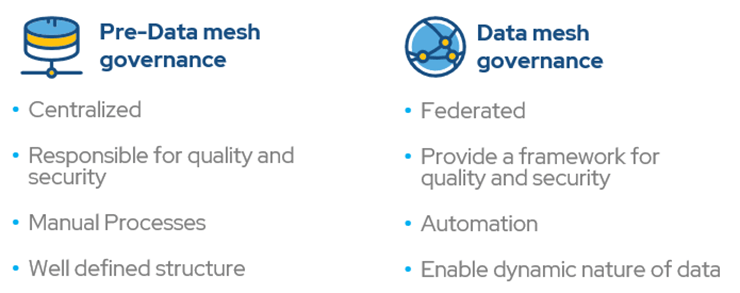

Federated computational governance

The idea of governance for data is certainly nothing new, but Data Mesh proposes a paradigm shift. At a high level, the most significant distinction is to govern in more of a “federated” manner rather than truly centralizing governance. This means that any central governing body is really just providing a framework or guardrails in terms of quality and security, but true responsibility for quality and security lies within the domains. There should also be a focus on automation rather than manual processes (although exactly how to automate governance seems like a significant challenge for which there doesn’t appear to be a specific prescription—though the book on Data mesh is still being written).

Finally, pre-data mesh governance often specified a well-defined structure for data. The venerable data warehouse is the prime example of this. Data mesh more readily acknowledges the dynamic nature of data and allows for domains to designate the structures that are most suitable for their data products.

If you want to dive deeper into federated computational governance, check out the section on the topic in Zhamak’s more recent Data Mesh article.

Conclusion

As you’ve hopefully gathered from reading this post, data mesh in many ways represents a completely new approach to data. While it certainly is prescriptive in many ways about how technology should be leveraged to implement data mesh principles, perhaps the bigger implementation challenge is the organizational/cultural changes that are needed in order to implement. Overcoming the inertia of decades of centralized, monolithic architecture will not be easy for most companies.

Nevertheless, we think that the four principles of data mesh address significant issues that have long plagued data and analytics applications, and therefore there is real value in thinking about them and gleaning what we can—regardless of whether your organization ever goes “full data mesh”. In an upcoming post, we’ll discuss this application further—in the meantime, comment or contact us to let us know your thoughts or ask questions.

Next Steps

We hope you found this article informative. Be sure to subscribe to our newsletter for data and analytics news, updates, and insights delivered directly to your inbox.

If you have any questions or would like PMsquare to provide guidance and support for your analytics solution, contact us today.